Minseo Kim

Undergraduate Student, CSE, Seoul National University

Hi, I’m an undergraduate student at Seoul National University majoring in Computer Science and Engineering. My research focuses on improving the efficiency of large-scale AI models, building on a deep understanding of the underlying systems. I aim to achieve this by developing system-aware algorithmic methods and cross-stack designs that enable efficient training and serving in real-world deployments. Currently, I am working on inference efficiency for large language models (LLMs) and diffusion language models (DLMs).

During my undergraduate years, I have been fortunate to be part of two great research groups. I am currently a visiting researcher in the Pallas Lab at Berkeley AI Research (BAIR), advised by Prof. Kurt Keutzer and Dr. Amir Gholami. Previously, I worked in the Architecture and Code Optimization Lab (ARC Lab) at Seoul National University, advised by Prof. Jae W. Lee.

I am seeking a PhD position starting in Fall 2026.

news

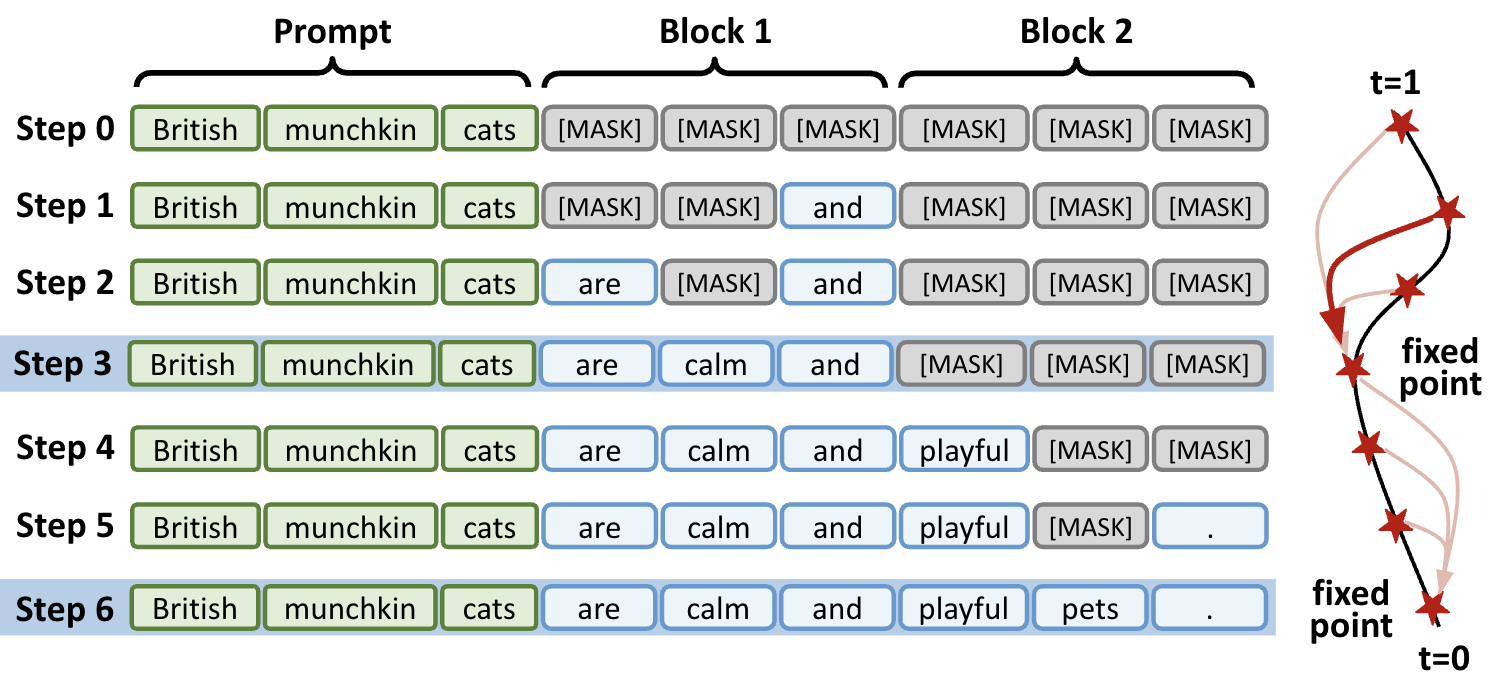

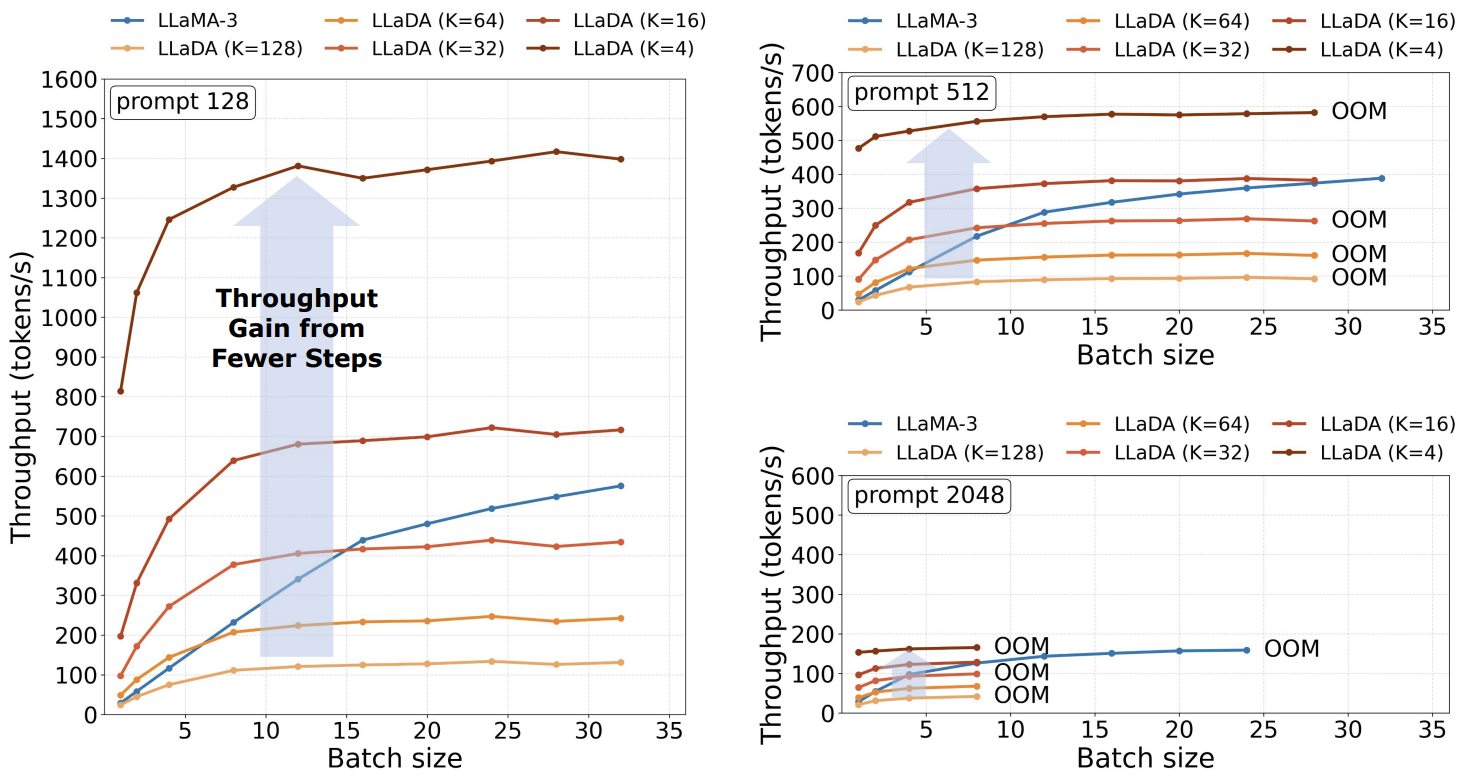

| Nov 24, 2025 | Our paper on accelerating DLM inference via fine-tuning is now on arXiv. Huge thanks to my collaborators - Chenfeng, Coleman, and Harman! [Link] |

|---|---|

| Nov 14, 2025 | Team Architects won the Grand Prize (NIPA President’s Award) at the 2025 AI Chip Contest! |

| Oct 07, 2025 | Our paper on DLM analysis is now on arXiv. [Link] This is my first paper at Berkeley! |

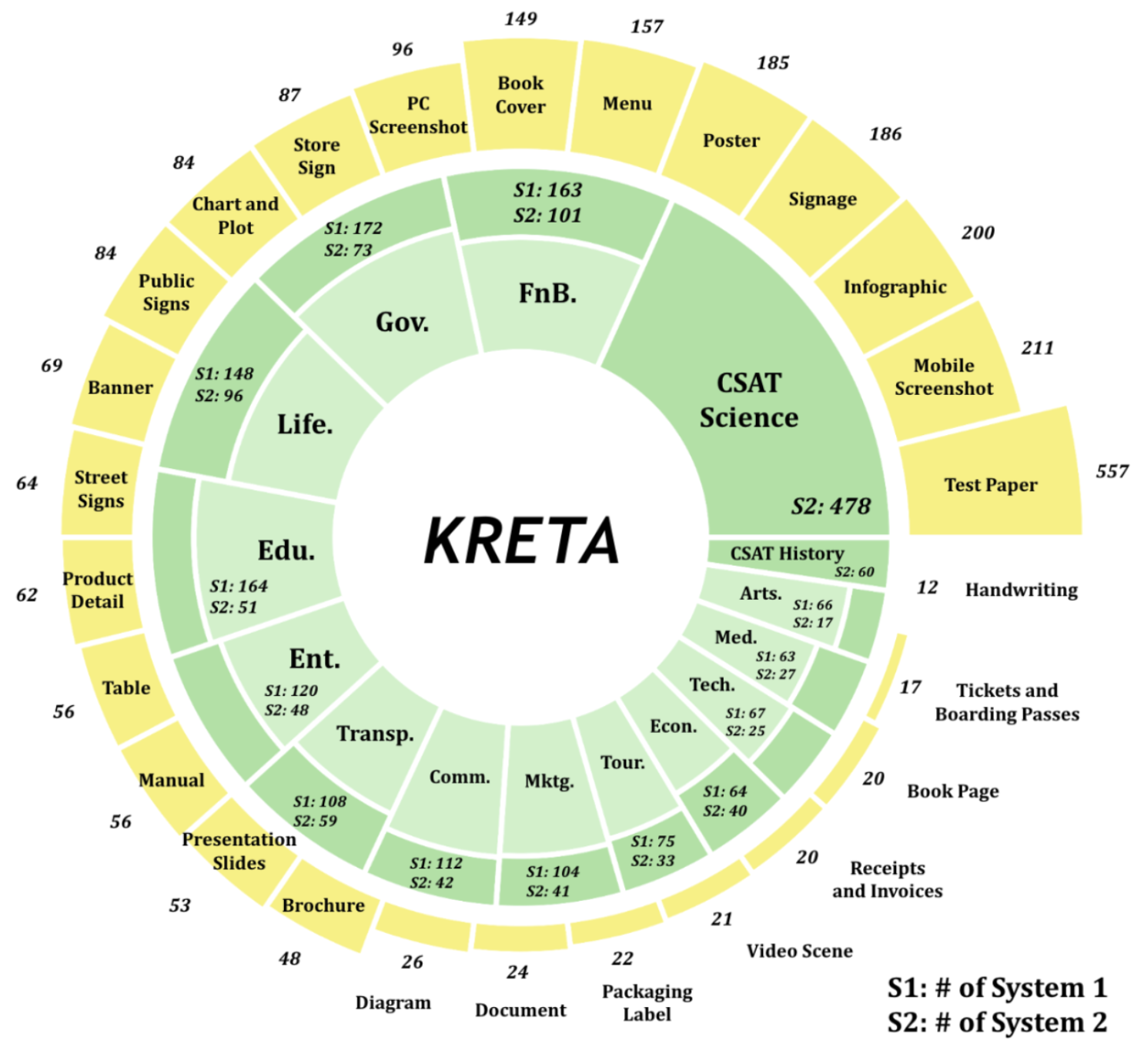

| Aug 21, 2025 | Our VLM team at AttentionX had a paper accepted to EMNLP 2025! I’ll be presenting it in Suzhou, China (Nov 5-7). [Link] |

selected publications

-

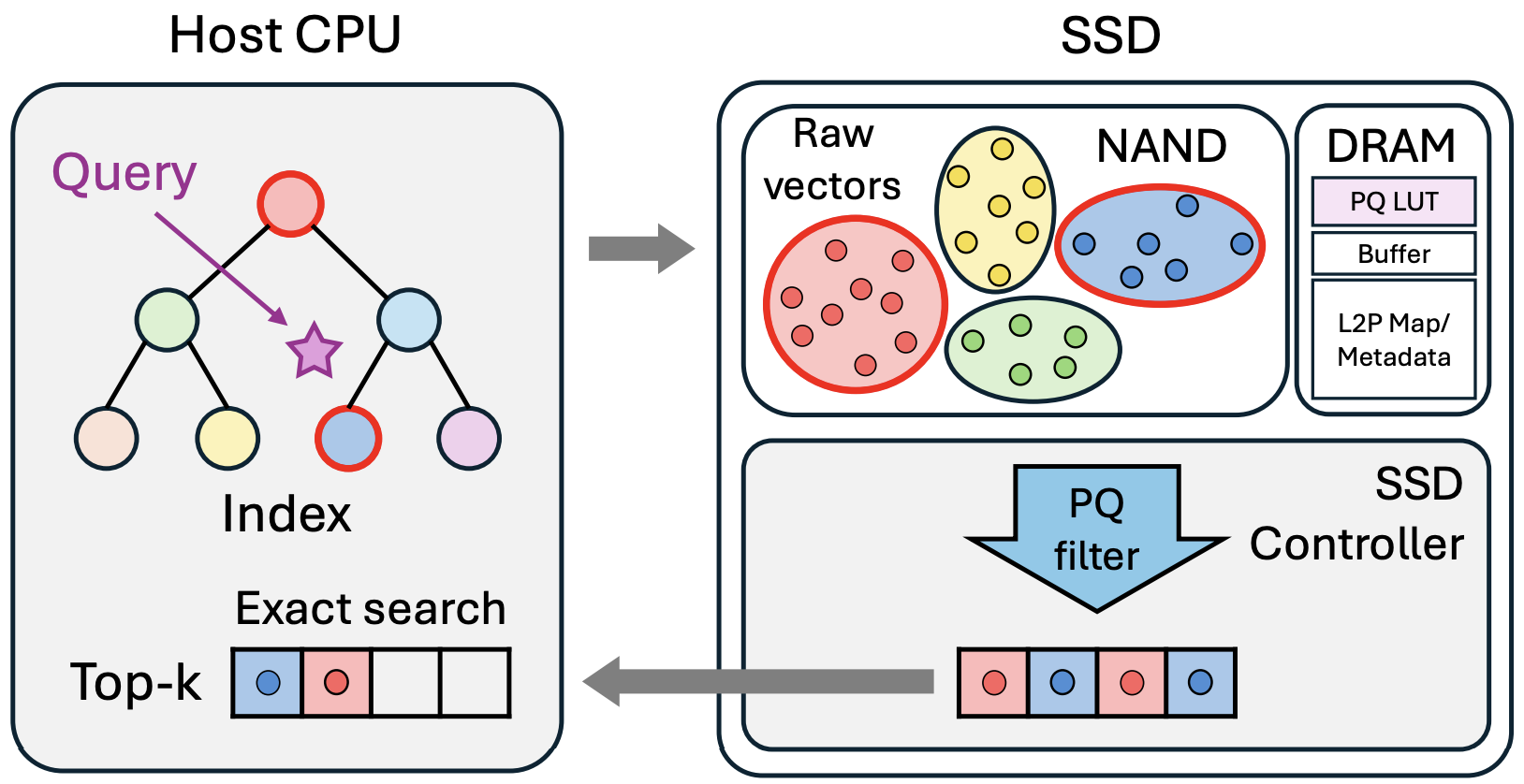

Beyond Next-Token Prediction: A Performance Characterization of Diffusion versus Autoregressive Language ModelsarXiv Preprint , 2025

Beyond Next-Token Prediction: A Performance Characterization of Diffusion versus Autoregressive Language ModelsarXiv Preprint , 2025